A z-Tree Course

1: Introduction to Experiments in Economics

Matteo Ploner

Università degli Studi di Trento

Making Inferences

Causal Inference

- Knowledge progresses by finding causes of phenomena

- e.g., Human activity $\Rightarrow ? \Rightarrow$ global warming

from "An Illustrated Book of Bad Arguments" by Ali Almossawi

Hypothesis Testing

- A description of Theories, Phenomena, and Data (Guala, 2005)

- Theories explain Phenomena

- Phenomena organize data that are messy, suggestive and idiosyncratic

- Data do not need a theoretical explanation, while phenomena do

- Distinction between data and phenomena: two stages of scientific research

- Phase 1: data are organized to identify phenomena

- Phase 2: causes of phenomena are organized into theories

Perfectly Controlled Experimental Design (PCED)

- A Perfectly Controlled Experimental Design (PCED) represents in an abstract fashion the best way to test causal relations (Guala, 2005)

- A PCED is built around comparison and controlled variation

- Comparison → groups that have been exposed to different conditions are juxtaposed

- Controlled variation → all factors that are not intentionally manipulated should be kept constant across groups (uniformity)

Controlled Variation

- How to obtain a controlled variation?

- 1. Matching

- Units that are identical are assigned to groups in different conditions

- Consider a response variable $Y$

- $Y_t(u)$ is the value of the response that would be observed if unit $u$ were exposed to treatment $t$; $Y_c(u)$ is the corresponding value under the control condition $c$

- $Y_t(u)-Y_c(u)$ is the causal effect of $t$ (relative to $c$) on $u$

- Causal inference refers to the appraisal of $Y_t(u)-Y_c(u)$

- Fundamental Problem of Causal Inference: it is impossible to observe both $Y_t(u)$ and $Y_c(u)$ on the same unit and, therefore, it is impossible to observe the effect of $t$ on $u$

Controlled Variation (ii)

- How to obtain a controlled variation?

- 2. Randomization

- The random assignment of units to groups in different conditions makes the groups identical in expected terms
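A small simulation can illustrate why randomization works even though $Y_t(u)$ and $Y_c(u)$ are never observed together. This is only a sketch: the function name, the normal distributions, and all numeric values are illustrative assumptions, not part of the course material.

```python
import random
from statistics import mean


def simulate_random_assignment(n_units=10_000, seed=1):
    """Compare the true average effect with the estimate from random assignment."""
    rng = random.Random(seed)
    # Hypothetical potential outcomes: Y_c(u) and the unit-level effect Y_t(u) - Y_c(u).
    y_c = [rng.gauss(10.0, 2.0) for _ in range(n_units)]
    effect = [rng.gauss(1.5, 0.5) for _ in range(n_units)]
    y_t = [c + e for c, e in zip(y_c, effect)]

    # Random assignment: each unit is treated with probability 1/2.
    treated = [rng.random() < 0.5 for _ in range(n_units)]

    # Only one potential outcome per unit is observed.
    observed_t = [y_t[u] for u in range(n_units) if treated[u]]
    observed_c = [y_c[u] for u in range(n_units) if not treated[u]]

    true_average_effect = mean(effect)
    estimate = mean(observed_t) - mean(observed_c)
    return true_average_effect, estimate


true_average_effect, estimate = simulate_random_assignment()
print(round(true_average_effect, 2), round(estimate, 2))
```

The two numbers should be close because the treated and control groups are identical in expectation, not because any single unit is observed under both conditions.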

The identification framework (Newey, 2007)

- $Y_{i1}$ is the outcome of subject $i$ when exposed to the intervention ($D_i=1$)

- $Y_{i0}$ is the outcome of subject $i$ when NOT exposed to the intervention ($D_i=0$)

- The outcome $Y$ for individual $i$ is thus $Y_i=Y_{i0}+(Y_{i1}-Y_{i0})D_i=\alpha_i+\beta_iD_i$, with $\alpha_i=Y_{i0}$ and $\beta_i=Y_{i1}-Y_{i0}$

- The average treatment effect (ATE) is the average of the slope ($\beta_i$) over the entire population

- This is generally what the experimenter is interested in

- The average treatment effect on the treated (ATT) is the average of the slope ($\beta_i$) over the population of treated ($D_i=1$)

The identification framework (Newey, 2007) (ii)

- If

- the mean of $Y_{i1}$ does not depend on the treatment status ($E[Y_{i1}|D_i]=E[Y_{i1}]$)

- and

- the mean of $Y_{i0}$ does not depend on the treatment status ($E[Y_{i0}|D_i]=E[Y_{i0}]$)

- → $ATE=ATT=E[\beta_i]$

- This condition holds when individuals are randomly assigned to treatments

- Being assigned to a treatment does not depend on the outcome variable $Y_i$

- In field happenstance data, it is likely that $E[Y_{i0}|D_i]\neq E[Y_{i0}]$ (self-selection into a treatment)

- Addressed econometrically via instrumental variables or "selection on observables" techniques
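The role of random assignment can be made explicit with a short decomposition in the notation above. It is a standard textbook identity rather than something stated on the slides, and "selection bias" is an added label for the second term.

$$
\begin{aligned}
E[Y_i \mid D_i=1]-E[Y_i \mid D_i=0]
&= E[Y_{i1} \mid D_i=1]-E[Y_{i0} \mid D_i=0] \\
&= \underbrace{E[Y_{i1}-Y_{i0} \mid D_i=1]}_{ATT}
 + \underbrace{E[Y_{i0} \mid D_i=1]-E[Y_{i0} \mid D_i=0]}_{\text{selection bias}}
\end{aligned}
$$

Under random assignment $E[Y_{i0}|D_i]=E[Y_{i0}]$, so the second term is zero. Since $E[Y_{i1}|D_i]=E[Y_{i1}]$ as well, $ATT=ATE$ and the simple difference in group means identifies $E[\beta_i]$.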

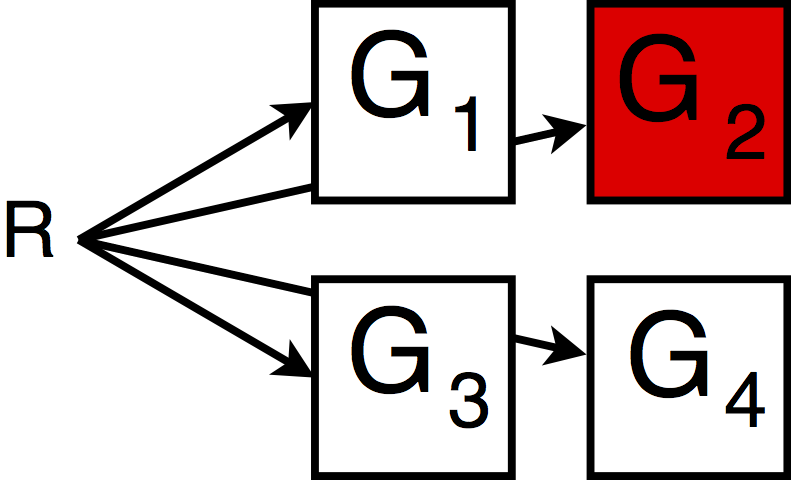

Models of Experimental Design

- Basic models of experimental design (Guala, 2005)

- Post-test

- The (causal) effect is given by $Y_{G_1}-Y_{G_2}$

- Pre-test/post-test

- The (causal) effect is given by $(O_{G_2}-O_{G_1})-(O_{G_4}-O_{G_3})$ (difference-in-differences)

- The pre-test/post-test design makes it possible to control for the impact of measurement
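The pre-test/post-test formula can be computed directly from group observations. The sketch below assumes that $G_1$ and $G_2$ are the pre- and post-test observations of the treated group and that $G_3$ and $G_4$ are those of the control group. The design diagram is not reproduced here, so this labelling is an assumption, and the numbers are made up for illustration.

```python
from statistics import mean


def diff_in_diff(o_g1, o_g2, o_g3, o_g4):
    """(O_G2 - O_G1) - (O_G4 - O_G3), computed on group means."""
    change_treated = mean(o_g2) - mean(o_g1)   # post minus pre, treated group
    change_control = mean(o_g4) - mean(o_g3)   # post minus pre, control group
    return change_treated - change_control


# Illustrative observations (not real data).
pre_treated = [10, 12, 11]
post_treated = [15, 16, 14]
pre_control = [10, 11, 12]
post_control = [12, 12, 12]

print(diff_in_diff(pre_treated, post_treated, pre_control, post_control))
```

Here the treated group changes by 4 and the control group by 1, so the estimated effect is 3. The control group's change captures whatever moved outcomes between the two measurements without the treatment.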

Experimental Economics

Why Run Economic Experiments?

- Roth (1995) identifies 3 main motives for experiments in Economics

- Speaking to Theorists

- Testing predictions originating from formal theories

- The organization of empirical regularities into formal theories

- Searching for Facts (Meaning)

- The study of effects that are not (yet) part of a well-structured theory

- The accumulation of facts that may lead to the creation of new theories ("Searching for Meanings")

- Whispering to the Ears of Princes

- The formulation of advice for policy makers

Methodological Issues

- Hertwig and Ortmann (2001) identify 4 essential methodological aspects of experiments in economics

- Script Enactment

- Comprehensive instructions reduce the risk of "demand effects"

- Favors replicability

- Repeated trials

- Allows for learning and, potentially, equilibrium convergence

- Performance-based monetary payments

- Proper monetary payments make it possible to control for the disturbance due to cognitive effort

- Deception

- Deception may induce suspicion among participants and generate uncontrolled reputation spillovers

Validity of Experimental Findings

- Methodological aspects affect the validity of the experiment

- The validity of an experiment can be assessed along two dimensions

- Internal validity

- The extent to which an experiment allows inferences to be drawn about behavior in the experiment

- External validity

- The extent to which an experiment allows inferences to be drawn about behavior outside the experiment

Internal Validity

"Just as we need to use clean test tubes in chemistry experiments, so we need to get the laboratory conditions right when testing economic theory" (Binmore, 1999)

- To ensure internal validity a proper experimental setting should be implemented

- Examples of dirty tubes

- Bad incentive schemes

- Individuals self-select into treatments

- Deceitful instructions

- Experimenter effects

- Reputation (supergames)

External Validity

- 3 dimensions of artificiality that may affect the external validity of experiments (Bardsley et al., 2010)

- 1. Artificiality of isolation

- The experimental setting may omit relevant features of the external environment

- Findings in the lab may not survive when transferred to the complexity of the real world

- 2. Artificiality of omission and contamination

- The laboratory environment may bias results (e.g., bad incentives)

- A lack of internal validity produces a lack of external validity

External Validity (ii)

- 3. Artificiality of alteration

- The laboratory alters natural phenomena

- If individuals attach a different meaning to a market in the laboratory than to a real stock market $\rightarrow$ stock markets cannot be studied in the laboratory

- Artificiality of alteration is the most challenging

Building Blocks

Building Blocks

- Repetition

- One shot vs. repeated trials

- Stationary repetition, conditional task, ...

- Payment scheme

- Random lottery incentive, cumulative payment ...

- Choice Task (stage game)

- Roles, action space, ...

- Matching

- Partner matching, absolute stranger, perfect stranger, ...

Repetitions

- One-shot: the stage game is played only once

| Round | ID | | | |
|---|---|---|---|---|
| 1 | 101 | 102 | 103 | 104 |

- Repeated trials: the stage game is repeated $N>1$ times

| Round | ID | | | |
|---|---|---|---|---|
| 1 | 101 | 102 | 103 | 104 |
| 2 | 101 | 102 | 103 | 104 |
| 3 | 101 | 102 | 103 | 104 |
| ... | 101 | 102 | 103 | 104 |

- Repetitions can be

- Stationary: same task over distinct rounds

- Dynamic: different tasks over distinct rounds

Incentive-compatibility

- Motivation of participants is controlled via (monetary) rewards $\Rightarrow$ Induced Value Theory (Smith, 1976)

- 3 qualifications of the postulate

- Subjective costs (values) may be non-negligible and interfere with monetary reward

- e.g., the cost of cognitive effort may outweigh the monetary incentives

- A game value may be attached to experimental (nominal) outcomes (e.g., points or tokens)

- As long as the value function is monotone it does not interfere with induced valuation (reinforcement)

- Individuals may be concerned with others' utility and thus not be independent in their evaluation

- Increasing one's own payoff does not necessarily increase utility (e.g., equity concerns)

Payment schemes

- Different payment schemes could be adopted when repeated choices are collected

- Cumulative payment

- All choices are rewarded

- Random Lottery Incentive (RLI)

- One choice is randomly chosen for payment

- The chosen round is communicated to participants only at the end of the experiment

- The RLI mechanism generates incentive-compatible choices as long as participants are expected utility (EUT) maximizers

- It avoids the potential confounds due to wealth effects or hedging that arise under cumulative payment
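The two payment schemes can be contrasted in a few lines. This is a minimal sketch: the function names, the token payoffs, and the conversion rate of 10 euro cents per token are hypothetical choices made for illustration.

```python
import random


def cumulative_payment(round_payoffs, cents_per_token=10):
    """All rounds are paid: sum the token payoffs and convert to euro cents."""
    return sum(round_payoffs) * cents_per_token


def random_lottery_payment(round_payoffs, cents_per_token=10, rng=None):
    """One round is drawn at random for payment (random lottery incentive)."""
    rng = rng or random.Random()
    index = rng.randrange(len(round_payoffs))        # every round is equally likely
    return index + 1, round_payoffs[index] * cents_per_token


tokens = [20, 35, 10, 50]                            # hypothetical payoffs in 4 rounds
print(cumulative_payment(tokens))                    # total over all rounds, in cents
paid_round, amount = random_lottery_payment(tokens, rng=random.Random(0))
print(paid_round, amount)                            # revealed only after the last round
```

Under the random lottery incentive, earnings from earlier rounds do not accumulate during the session, which is why wealth effects and hedging across rounds are less of a concern than under cumulative payment.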

Choice Task

- Aspects of the choice task that need to be carefully defined

- Roles in the interaction should be assigned to participants

- Roles are usually assigned at random, by randomly assigning participants to computer terminals

- Action space

- The action space affects the nature of the outcome variable

- Dichotomous data, Count data, Continuous data, String data

- Payoff rule

- Must produce incentive compatible choices

- Important to check that participants understood it

- When tokens are employed a conversion rate should be specified

Data Independence

- Statistical tests should be run on independent observations

- The outcome of one observational unit should not be affected by the outcome of another observational unit

- When the choices of one participant interact with the choices of another participant, their choices are no longer independent

- The contagion analogy

- This is true even when the interaction is indirect, i.e., mediated by a bridging individual

An Example

| Round | ID | | | |
|---|---|---|---|---|
| 1 | 1 | 2 | 3 | 4 |
| 2 | 1 | 2 | 3 | 4 |

- 4 players interact in groups of 2 over two rounds

- Blue and Red

- Thus, the following connections are created

| ID | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 1 | | | |
| 2 | 1 | 1 | | |
| 3 | 1 | 0 | 1 | |
| 4 | 0 | 1 | 1 | 1 |

- 3 and 2 are not directly connected, but indirectly (via Pl. 4)

- Only one independent observation in the experiment!

- Average choice of the four players
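Counting independent observations amounts to finding the connected components of the "who met whom" network. The sketch below uses a small union-find structure. The function name is hypothetical, and the grouping (1 with 2 and 3 with 4 in round 1, then 1 with 3 and 2 with 4 in round 2) is inferred from the connection table above, since the group colours did not survive in the round table.

```python
def independent_clusters(groups_by_round):
    """Return the sets of participants that are directly or indirectly connected."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]    # path halving
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for groups in groups_by_round:
        for group in groups:
            find(group[0])                   # register singletons too
            for member in group[1:]:
                union(group[0], member)

    clusters = {}
    for participant in parent:
        clusters.setdefault(find(participant), set()).add(participant)
    return list(clusters.values())


rounds = [
    [(1, 2), (3, 4)],    # round 1
    [(1, 3), (2, 4)],    # round 2
]
print(len(independent_clusters(rounds[:1])))   # after round 1 only
print(len(independent_clusters(rounds)))       # after both rounds
```

After the first round there are two separate pairs. After the second round all four players are linked, so the session yields a single independent observation.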

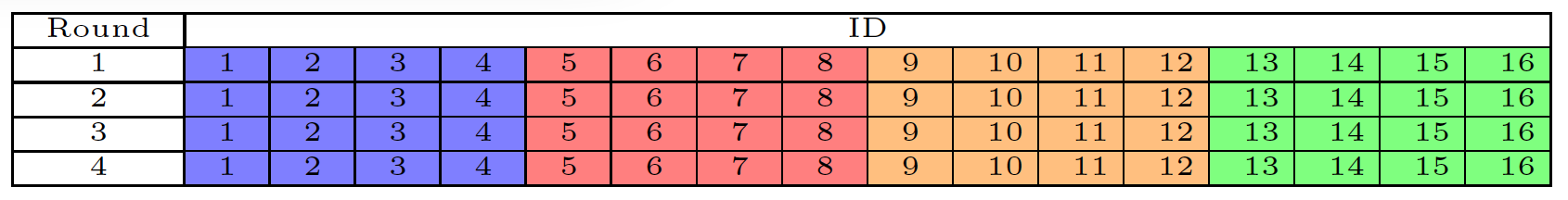

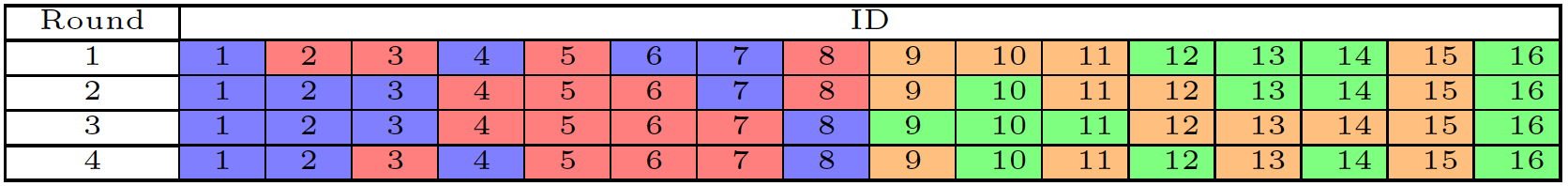

Matching Protocol

- 16 Participants

- ID: 1, 2, ... 16

- 4 groups

- Blue, Red, Orange, Green

- 4 rounds

- Round 1, Round 2, Round 3, Round 4

- Each group has 4 participants

Partner Matching

- The same 4 participants interact in all 4 rounds

- Players 1-4 are always in the same (blue) group

- Reputation building

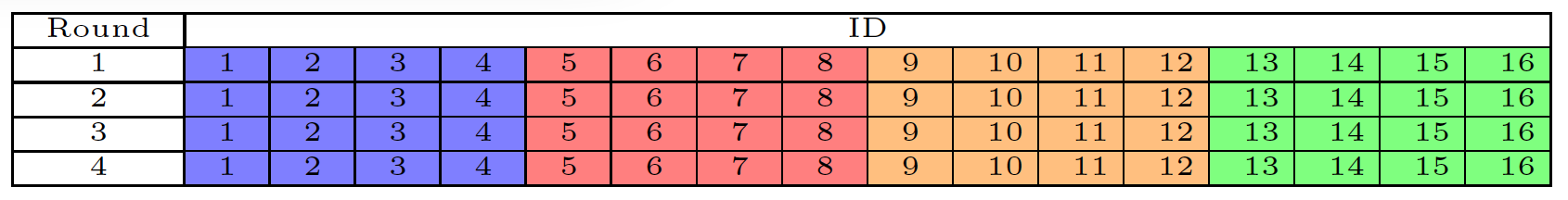

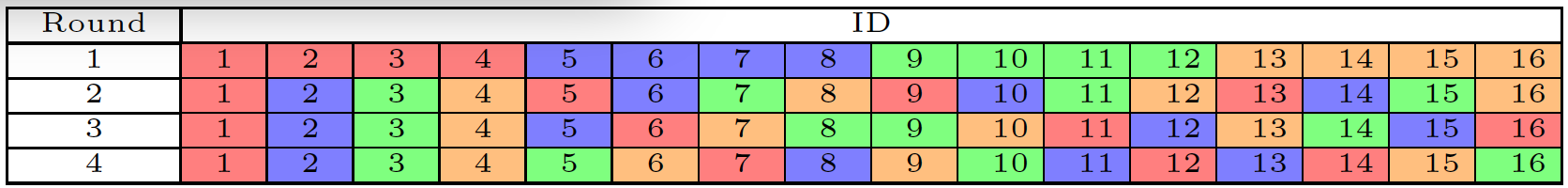

Random Partner Matching

- Each group is made of 4 participants and participants in a group interact together

- At each repetition the groups are formed randomly

- e.g., player 1 is in group Blue in rounds 1-3 and in group Green in round 4

- Two players can be matched together in different rounds

- e.g., 1 and 9 are in the same group in rounds 1 and 4

- PRO: simple to implement and to explain; control over reputation concerns

- CONS: the number of independent observations is endogenous and shrinks with repetitions

Random Partner Matching with Matching Groups

- 4 groups: Blue, Red, Orange, Green

- The groups are matched into two subgroups

- [Blue, Red] and [Orange, Green]

- Each group is made of 4 participants and participants in a group interact together

- In each repetition the groups are formed randomly, within the subgroups

- Participants in the two subgroups never meet $\Rightarrow$ segregation

- A way to increase the number of independent observations with random matching
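Random matching within matching groups can be sketched as follows. The function names are hypothetical, and the split of IDs 1-8 and 9-16 into the two subgroups is an assumption made for illustration. Passing a single block containing all 16 participants would reproduce plain random partner matching.

```python
import random


def random_matching(participants, group_size, n_rounds, rng):
    """Re-form groups at random in every round."""
    schedule = []
    for _ in range(n_rounds):
        shuffled = list(participants)
        rng.shuffle(shuffled)
        groups = [tuple(shuffled[i:i + group_size])
                  for i in range(0, len(shuffled), group_size)]
        schedule.append(groups)
    return schedule


def matching_within_matching_groups(matching_groups, group_size, n_rounds, rng):
    """Random matching, with re-matching restricted to each matching group."""
    per_block = [random_matching(block, group_size, n_rounds, rng)
                 for block in matching_groups]
    # Merge the blocks round by round into one session-level schedule.
    return [[group for block_schedule in per_block for group in block_schedule[r]]
            for r in range(n_rounds)]


blocks = [list(range(1, 9)), list(range(9, 17))]     # assumed split into two subgroups
schedule = matching_within_matching_groups(blocks, 4, 4, random.Random(2024))
for round_number, groups in enumerate(schedule, start=1):
    print(round_number, groups)
```

Because participants 1-8 never share a group with participants 9-16, the session can yield up to two independent observations instead of one.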

Absolute Stranger Matching

- Two participants never meet twice

- PRO: Perfect control over reputation concerns

- CONS: Observations are not independent

- Everyone meets everyone
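For 16 participants in groups of 4 over 4 rounds, a schedule in which no two participants meet twice can be built from the lines of a 4x4 grid over the finite field GF(4). This is one possible construction, offered as a sketch and not necessarily the one used in the course. The function names and the ID layout (`4 * x + y + 1`) are assumptions.

```python
from itertools import combinations

# Multiplication table of GF(4); addition (and subtraction) in GF(4) is bitwise XOR.
GF4_MUL = [
    [0, 0, 0, 0],
    [0, 1, 2, 3],
    [0, 2, 3, 1],
    [0, 3, 1, 2],
]


def stranger_schedule():
    """Four rounds of four groups of four; each round uses lines of one slope."""
    schedule = []
    for slope in range(4):
        groups = {intercept: [] for intercept in range(4)}
        for x in range(4):
            for y in range(4):
                # The point (x, y) lies on the line y = slope * x + intercept.
                intercept = y ^ GF4_MUL[slope][x]
                groups[intercept].append(4 * x + y + 1)   # participant ID 1..16
        schedule.append([tuple(group) for group in groups.values()])
    return schedule


def max_meetings(schedule):
    """Largest number of times any pair of participants shares a group."""
    counts = {}
    for groups in schedule:
        for group in groups:
            for pair in combinations(sorted(group), 2):
                counts[pair] = counts.get(pair, 0) + 1
    return max(counts.values())


schedule = stranger_schedule()
for round_number, groups in enumerate(schedule, start=1):
    print(round_number, groups)
print(max_meetings(schedule))
```

Two distinct grid points lie on at most one common line among these four slopes, so `max_meetings` returns 1 for this schedule. The same checker can be applied to any other candidate schedule.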

Stages of an Experiment

- Design

- The building blocks should meet research hypotheses

- Repetitions, Payment scheme, Choice task, Matching

- Useful to discuss the design in internal seminars

- Recruiting of participants

- Usually participants are registered in a database (e.g., ORSEE)

- Participation in the experiment is solicited via emails or posters

- Conduct of the experiment

- Participants are gathered in a room (laboratory) and asked to make their choices

- Computerized or paper & pencil

- Data analysis

- Data are collected, organized and analyzed

- Focus on hypothesis testing

State of the Art

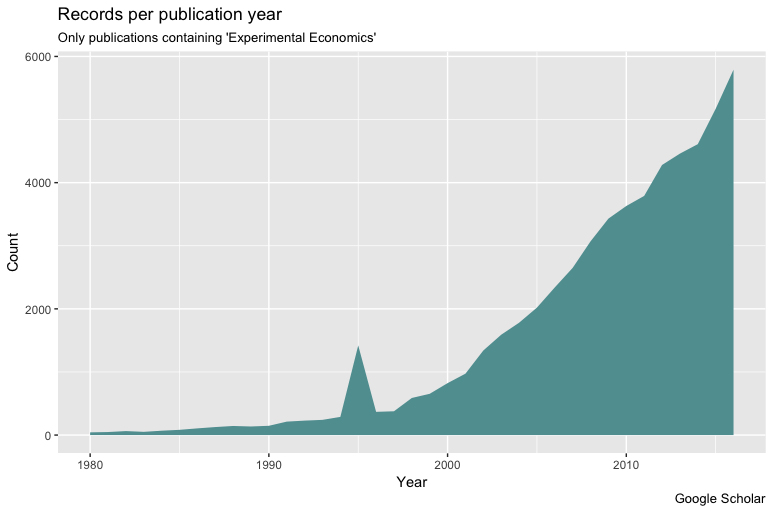

Experimental Economics

- The number of publications referring to "Experimental Economics" has grown rapidly

Replications

- Experiments can be replicated (unlike field happenstance data)

- Most journals publish details about procedures and instructions

- Reproducing results is key to the credibility of the discipline

- Two studies present evidence on the reproducibility of results

- In economics (Camerer et al., 2016)

- In psychology (Open Science Collaboration, 2015)

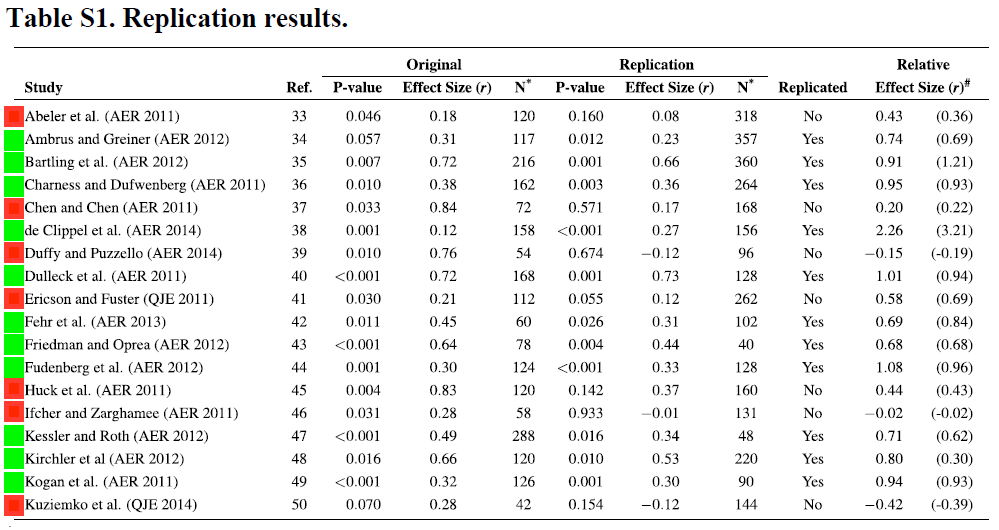

Replicability of Economic Experiments

- Camerer et al. (2016) independently replicate 18 experimental studies published in AER and QJE

- A significant effect in the same direction as the original is found in 11 out of 18 (61.1%)

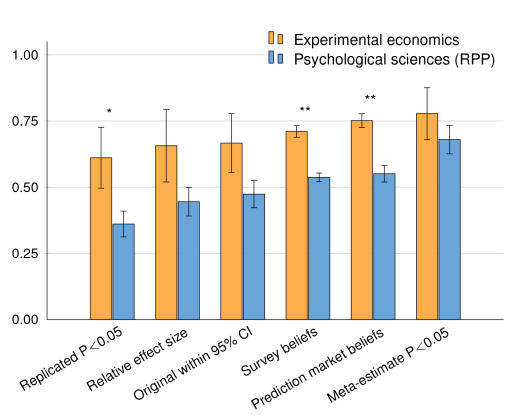

Replicability of Economic Experiments (ii)

- Camerer et al. (2016) compare their results to those of Open Science Collaboration (2015) in Psychology

- According to Camerer et al. (2016) the better relative performance of Economics may be due to

- Monetary incentives and truthful information

- Instructions and data are routinely published with the paper

- Open Science Collaboration (2015) evaluate 100 studies and replicate 36% of them